Executors#

Synchronous Base Executor Class#

- class covalent.executor.base.BaseExecutor(*args, **kwargs)[source]#

Base executor class to be used for defining any executor plugin. Subclassing this class will allow you to define your own executor plugin which can be used in covalent.

- log_stdout#

The path to the file to be used for redirecting stdout.

- log_stderr#

The path to the file to be used for redirecting stderr.

- cache_dir#

The location used for cached files in the executor.

- time_limit#

Time limit for the task.

- retries#

Number of times to retry execution upon failure

Methods:

cancel(task_metadata, job_handle) – Method to cancel the job identified uniquely by the job_handle (base class)

execute(function, args, kwargs, dispatch_id, …) – Execute the function with the given arguments.

from_dict(object_dict) – Rehydrate a dictionary representation

get_cancel_requested() – Check if the task was requested to be cancelled by the user

get_dispatch_context(dispatch_info) – Start a context manager that will be used to access the dispatch info for the executor.

get_version_info() – Query the database for the task’s Python and Covalent version

run(function, args, kwargs, task_metadata) – Abstract method to run a function in the executor.

send(task_specs, resources, task_group_metadata) – Submit a list of task references to the compute backend.

set_job_handle(handle) – Save the job_id/handle returned by the backend executing the task

set_job_status(status) – Sets the job state

setup(task_metadata) – Placeholder to run any executor specific tasks

teardown(task_metadata) – Placeholder to run any executor specific cleanup/teardown actions

to_dict() – Return a JSON-serializable dictionary representation of self

validate_status(status) – Overridable filter

write_streams_to_file(stream_strings, …) – Write the contents of stdout and stderr to respective files.

- cancel(task_metadata, job_handle)[source]#

Method to cancel the job identified uniquely by the job_handle (base class)

- Arg(s)

task_metadata: Metadata of the task to be cancelled

job_handle: Unique ID of the job assigned by the backend

- Return(s)

False by default

- Return type

bool

- execute(function, args, kwargs, dispatch_id, results_dir, node_id=-1)[source]#

Execute the function with the given arguments.

This calls the executor-specific run() method.

- Parameters

function (Callable) – The input Python function which will be executed and whose result is ultimately returned by this function.

args (List) – List of positional arguments to be used by the function.

kwargs (Dict) – Dictionary of keyword arguments to be used by the function.

dispatch_id (str) – The unique identifier of the external lattice process which is calling this function.

results_dir (str) – The location of the results directory.

node_id (int) – ID of the node in the transport graph which is using this executor.

- Returns

The result of the function execution.

- Return type

output
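The relationship between execute() and run() can be sketched with standard-library stand-ins. The classes below (SketchExecutor, InProcessExecutor) are hypothetical and are not Covalent's implementation; they only illustrate a concrete execute() that builds the task metadata, captures the output streams, and delegates to a subclass-specific run().

```python
import io
from abc import ABC, abstractmethod
from contextlib import redirect_stderr, redirect_stdout
from typing import Any, Callable, Dict, List


class SketchExecutor(ABC):
    """Hypothetical stand-in for a base executor (not the Covalent class)."""

    def execute(self, function: Callable, args: List, kwargs: Dict,
                dispatch_id: str, results_dir: str, node_id: int = -1) -> Any:
        # Bundle the identifiers that the backend-specific run() receives.
        task_metadata = {"dispatch_id": dispatch_id, "node_id": node_id}
        # Capture anything the task prints so it could later be written to log files.
        # results_dir is accepted for signature parity but unused in this sketch.
        self.stdout, self.stderr = io.StringIO(), io.StringIO()
        with redirect_stdout(self.stdout), redirect_stderr(self.stderr):
            return self.run(function, args, kwargs, task_metadata)

    @abstractmethod
    def run(self, function: Callable, args: List, kwargs: Dict, task_metadata: Dict) -> Any:
        """Backend-specific execution, supplied by each subclass."""


class InProcessExecutor(SketchExecutor):
    """Runs the function directly in the current process."""

    def run(self, function, args, kwargs, task_metadata):
        print(f"running node {task_metadata['node_id']}")
        return function(*args, **kwargs)


executor = InProcessExecutor()
result = executor.execute(lambda x, y: x + y, [1], {"y": 2},
                          "dispatch-0", "/tmp/results", node_id=3)
```

In this sketch only run() has to be written for a new backend; the stream handling in execute() is shared by every subclass.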

- from_dict(object_dict)#

Rehydrate a dictionary representation

- Parameters

object_dict (dict) – a dictionary representation returned by to_dict

- Returns

self

Instance attributes will be overwritten.

- get_cancel_requested()[source]#

Check if the task was requested to be cancelled by the user

- Arg(s)

None

- Return(s)

True/False whether task cancellation was requested

- Return type

bool

- get_dispatch_context(dispatch_info)#

Start a context manager that will be used to access the dispatch info for the executor.

- Parameters

dispatch_info (DispatchInfo) – The dispatch info to be used inside current context.

- Return type

AbstractContextManager[DispatchInfo]

- Returns

A context manager object that handles the dispatch info.
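One way such a context manager could be built is sketched below with contextvars and contextlib. DispatchInfoSketch, dispatch_context, and the module-level variable are hypothetical names chosen for illustration; the sketch does not claim to mirror how Covalent stores its dispatch info.

```python
from contextlib import contextmanager
from contextvars import ContextVar
from dataclasses import dataclass


@dataclass
class DispatchInfoSketch:
    """Hypothetical stand-in for DispatchInfo."""
    dispatch_id: str


# Holds the dispatch info that is active in the current context, if any.
_current_dispatch = ContextVar("current_dispatch", default=None)


@contextmanager
def dispatch_context(info: DispatchInfoSketch):
    token = _current_dispatch.set(info)
    try:
        yield info
    finally:
        # Restore whatever was active before entering the block.
        _current_dispatch.reset(token)


with dispatch_context(DispatchInfoSketch("dispatch-0")):
    inside = _current_dispatch.get().dispatch_id
outside = _current_dispatch.get()
```

Code running inside the with block can read the active dispatch info without having it passed explicitly, and the previous value is restored on exit.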

- get_version_info()[source]#

Query the database for the task’s Python and Covalent version

- Arg:

dispatch_id: Dispatch ID of the lattice

- Returns

{"python": python_version, "covalent": covalent_version}

- abstract run(function, args, kwargs, task_metadata)[source]#

Abstract method to run a function in the executor.

- Parameters

function (Callable) – The function to run in the executor

args (List) – List of positional arguments to be used by the function

kwargs (Dict) – Dictionary of keyword arguments to be used by the function.

task_metadata (Dict) – Dictionary of metadata for the task. Current keys are dispatch_id and node_id

- Returns

The result of the function execution

- Return type

output

- async send(task_specs, resources, task_group_metadata)[source]#

Submit a list of task references to the compute backend.

- Parameters

task_specs (List[Dict]) – a list of TaskSpecs

resources (ResourceMap) – a ResourceMap mapping task assets to URIs

task_group_metadata (Dict) – a dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

The return value of send() will be passed directly into poll().

- set_job_handle(handle)[source]#

Save the job_id/handle returned by the backend executing the task

- Arg(s)

handle: Any JSONable type identifying the task being executed by the backend

- Return(s)

Response from saving the job handle to database

- Return type

Any

- async set_job_status(status)[source]#

Sets the job state

For use with send/receive API

- Return(s)

Whether the action succeeded

- Return type

bool

- teardown(task_metadata)[source]#

Placeholder to run any executor specific cleanup/teardown actions

- Return type

Any

- to_dict()#

Return a JSON-serializable dictionary representation of self

- Return type

dict

- write_streams_to_file(stream_strings, filepaths, dispatch_id, results_dir)[source]#

Write the contents of stdout and stderr to respective files.

- Parameters

stream_strings (Iterable[str]) – The stream_strings to be written to files.

filepaths (Iterable[str]) – The filepaths to be used for writing the streams.

dispatch_id (str) – The ID of the dispatch which initiated the request.

results_dir (str) – The location of the results directory.

- Return type

None
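A minimal sketch of what writing the streams could look like, using only the standard library. The function name write_streams_sketch is hypothetical, and the handling of relative paths (placing them under results_dir/dispatch_id) and the append mode are assumptions made for this example rather than documented behaviour.

```python
import tempfile
from pathlib import Path
from typing import Iterable


def write_streams_sketch(stream_strings: Iterable[str], filepaths: Iterable[str],
                         dispatch_id: str, results_dir: str) -> None:
    """Append each stream to its file (assumed behaviour, not Covalent's code)."""
    for text, filepath in zip(stream_strings, filepaths):
        if not filepath:
            continue  # no destination configured for this stream
        path = Path(filepath).expanduser()
        if not path.is_absolute():
            # Assumption: relative paths live under <results_dir>/<dispatch_id>/.
            path = Path(results_dir) / dispatch_id / path
        path.parent.mkdir(parents=True, exist_ok=True)
        with open(path, "a") as handle:
            handle.write(text)


results_dir = tempfile.mkdtemp()
write_streams_sketch(["hello\n", "oops\n"], ["stdout.log", "stderr.log"],
                     "dispatch-0", results_dir)
stdout_text = (Path(results_dir) / "dispatch-0" / "stdout.log").read_text()
stderr_text = (Path(results_dir) / "dispatch-0" / "stderr.log").read_text()
```

The two iterables are paired positionally, so the first string goes to the first path (here stdout) and the second to the second path (here stderr).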

Asynchronous Base Executor Class#

- class covalent.executor.base.AsyncBaseExecutor(*args, **kwargs)[source]#

Async base executor class to be used for defining any executor plugin. Subclassing this class will allow you to define your own executor plugin which can be used in covalent.

This is analogous to BaseExecutor, except that the run() method and the optional setup() and teardown() methods are coroutines.

- log_stdout#

The path to the file to be used for redirecting stdout.

- log_stderr#

The path to the file to be used for redirecting stderr.

- cache_dir#

The location used for cached files in the executor.

- time_limit#

Time limit for the task.

- retries#

Number of times to retry execution upon failure

Methods:

cancel(task_metadata, job_handle) – Method to cancel the job identified uniquely by the job_handle (base class)

from_dict(object_dict) – Rehydrate a dictionary representation

get_cancel_requested() – Get if the task was requested to be canceled

get_dispatch_context(dispatch_info) – Start a context manager that will be used to access the dispatch info for the executor.

get_version_info() – Query the database for dispatch version metadata.

poll(task_group_metadata, data) – Block until the job has reached a terminal state.

receive(task_group_metadata, data) – Return a list of task updates.

run(function, args, kwargs, task_metadata) – Abstract method to run a function in the executor in async-aware manner.

send(task_specs, resources, task_group_metadata) – Submit a list of task references to the compute backend.

set_job_handle(handle) – Save the job handle to database

set_job_status(status) – Validates and sets the job state

setup(task_metadata) – Executor specific setup method

teardown(task_metadata) – Executor specific teardown method

to_dict() – Return a JSON-serializable dictionary representation of self

validate_status(status) – Overridable filter

write_streams_to_file(stream_strings, …) – Write the contents of stdout and stderr to respective files.

- async cancel(task_metadata, job_handle)[source]#

Method to cancel the job identified uniquely by the job_handle (base class)

- Arg(s)

task_metadata: Metadata of the task to be cancelled

job_handle: Unique ID of the job assigned by the backend

- Return(s)

False by default

- Return type

bool

- from_dict(object_dict)#

Rehydrate a dictionary representation

- Parameters

object_dict (dict) – a dictionary representation returned by to_dict

- Returns

self

Instance attributes will be overwritten.

- async get_cancel_requested()[source]#

Get if the task was requested to be canceled

- Arg(s)

None

- Return(s)

Whether the task has been requested to be cancelled

- Return type

Any

- get_dispatch_context(dispatch_info)#

Start a context manager that will be used to access the dispatch info for the executor.

- Parameters

dispatch_info (DispatchInfo) – The dispatch info to be used inside current context.

- Return type

AbstractContextManager[DispatchInfo]

- Returns

A context manager object that handles the dispatch info.

- async get_version_info()[source]#

Query the database for dispatch version metadata.

- Arg:

dispatch_id: Dispatch ID of the lattice

- Returns

{"python": python_version, "covalent": covalent_version}

- async poll(task_group_metadata, data)[source]#

Block until the job has reached a terminal state.

- Parameters

task_group_metadata (Dict) – A dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

data (Any) – The return value of send().

The return value of poll() will be passed directly into receive().

Raise NotImplementedError to indicate that the compute backend will notify the Covalent server asynchronously of job completion.

- Return type

Any

- async receive(task_group_metadata, data)[source]#

Return a list of task updates.

Each task must have reached a terminal state by the time this is invoked.

- Parameters

task_group_metadata (Dict) – A dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

data (Any) – The return value of poll() or the request body of /jobs/update.

- Return type

List[TaskUpdate]

- Returns

Returns a list of task results, each a TaskUpdate dataclass of the form

    {
        "dispatch_id": dispatch_id,
        "node_id": node_id,
        "status": status,
        "assets": {
            "output": {
                "remote_uri": output_uri,
            },
            "stdout": {
                "remote_uri": stdout_uri,
            },
            "stderr": {
                "remote_uri": stderr_uri,
            },
        },
    }

corresponding to the node ids (task_ids) specified in the task_group_metadata. This might be a subset of the node ids in the originally submitted task group as jobs may notify Covalent asynchronously of completed tasks before the entire task group finishes running.
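The way send(), poll(), and receive() hand data to one another can be sketched with a toy executor. ToyExecutor, run_task_group, the status strings, and the file:// URIs are all hypothetical; plain dictionaries stand in for the TaskUpdate dataclass, and the driver function only imitates the order in which the three coroutines are called.

```python
import asyncio
from typing import Any, Dict, List


class ToyExecutor:
    """Hypothetical executor with an in-memory 'backend' (not a Covalent class)."""

    def __init__(self) -> None:
        self.job_states: Dict[str, str] = {}

    async def send(self, task_specs: List[Dict], resources: Dict,
                   task_group_metadata: Dict) -> str:
        # Submit the task group and return a job handle for poll().
        job_id = f"job-{task_group_metadata['task_group_id']}"
        self.job_states[job_id] = "RUNNING"
        return job_id

    async def poll(self, task_group_metadata: Dict, data: Any) -> Dict:
        # `data` is whatever send() returned; a real backend would wait here.
        await asyncio.sleep(0)
        self.job_states[data] = "COMPLETED"
        return {"job_id": data, "status": self.job_states[data]}

    async def receive(self, task_group_metadata: Dict, data: Any) -> List[Dict]:
        # `data` is whatever poll() returned; emit one update per node id.
        dispatch_id = task_group_metadata["dispatch_id"]
        return [
            {
                "dispatch_id": dispatch_id,
                "node_id": node_id,
                "status": data["status"],
                "assets": {
                    name: {"remote_uri": f"file:///tmp/{dispatch_id}/{node_id}/{name}"}
                    for name in ("output", "stdout", "stderr")
                },
            }
            for node_id in task_group_metadata["node_ids"]
        ]


async def run_task_group(executor: ToyExecutor, metadata: Dict) -> List[Dict]:
    handle = await executor.send([], {}, metadata)     # send() result feeds poll()
    job_info = await executor.poll(metadata, handle)   # poll() result feeds receive()
    return await executor.receive(metadata, job_info)


metadata = {"dispatch_id": "dispatch-0", "node_ids": [0, 1], "task_group_id": 0}
updates = asyncio.run(run_task_group(ToyExecutor(), metadata))
```

Because each stage only sees the previous stage's return value, the handle returned by send() needs to carry everything poll() requires to locate the job.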

- abstract async run(function, args, kwargs, task_metadata)[source]#

Abstract method to run a function in the executor in async-aware manner.

- Parameters

function (Callable) – The function to run in the executor

args (List) – List of positional arguments to be used by the function

kwargs (Dict) – Dictionary of keyword arguments to be used by the function.

task_metadata (Dict) – Dictionary of metadata for the task. Current keys are dispatch_id and node_id

- Returns

The result of the function execution

- Return type

output

- async send(task_specs, resources, task_group_metadata)[source]#

Submit a list of task references to the compute backend.

- Parameters

task_specs (List[TaskSpec]) – a list of TaskSpecs

resources (ResourceMap) – a ResourceMap mapping task assets to URIs

task_group_metadata (Dict) – A dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

The return value of send() will be passed directly into poll().

- Return type

Any

- async set_job_handle(handle)[source]#

Save the job handle to database

- Arg(s)

handle: JSONable type identifying the job being executed by the backend

- Return(s)

Response from the listener that handles inserting the job handle to database

- Return type

Any

- async set_job_status(status)[source]#

Validates and sets the job state

For use with send/receive API

- Return(s)

Whether the action succeeded

- Return type

bool

- to_dict()#

Return a JSON-serializable dictionary representation of self

- Return type

dict

- async write_streams_to_file(stream_strings, filepaths, dispatch_id, results_dir)[source]#

Write the contents of stdout and stderr to respective files.

- Parameters

stream_strings (Iterable[str]) – The stream_strings to be written to files.

filepaths (Iterable[str]) – The filepaths to be used for writing the streams.

dispatch_id (str) – The ID of the dispatch which initiated the request.

results_dir (str) – The location of the results directory.

This uses aiofiles to avoid blocking the event loop.

- Return type

None

Dask Executor#

Executing tasks (electrons) in a Dask cluster. This is the default executor when covalent is started without the --no-cluster flag.

    from dask.distributed import LocalCluster

    cluster = LocalCluster()
    print(cluster.scheduler_address)

The address will look like tcp://127.0.0.1:55564 when running locally. Note that the Dask cluster does not persist when the process terminates.

This cluster can be used with Covalent by providing the scheduler address:

    import covalent as ct

    dask_executor = ct.executor.DaskExecutor(
        scheduler_address="tcp://127.0.0.1:55564"
    )

    @ct.electron(executor=dask_executor)
    def my_custom_task(x, y):
        return x + y

    ...

Local Executor#

Executing tasks (electrons) directly on the local machine

AWS Plugins#

Covalent is a Python-based workflow orchestration tool used to execute HPC and quantum tasks in heterogeneous environments.

By installing Covalent AWS Plugins, users can leverage a broad plugin ecosystem to execute tasks using the AWS resources best fit for each task.

Covalent AWS Plugins installs a set of executor plugins that allow tasks to be run in an EC2 instance, AWS Lambda, AWS ECS Cluster, AWS Batch Compute Environment, and as an AWS Braket Job for tasks requiring Quantum devices.

If you’re new to Covalent see the Getting Started Guide.

1. Installation#

To use the AWS plugin ecosystem with Covalent, simply install it with pip:

pip install "covalent-aws-plugins[all]"

This will ensure that all the AWS executor plugins listed below are installed.

Note

Users will require Terraform to be installed in order to use the EC2 plugin.

2. Included Plugins#

While each plugin can be installed separately, installing the above pip package installs all of the plugins below.

| Plugin Name | Use Case |
|---|---|
| AWS Batch Executor | Useful for heavy compute workloads (high CPU/memory). Tasks are queued to execute in the user-defined Batch compute environment. |
| AWS EC2 Executor | General purpose compute workloads where users can select compute resources. An EC2 instance is auto-provisioned using Terraform with selected compute settings to execute tasks. |
| AWS Braket Executor | Suitable for Quantum/Classical hybrid workflows. Tasks are executed using a combination of classical and quantum devices. |
| AWS ECS Executor | Useful for moderate to heavy workloads (low memory requirements). Tasks are executed in an AWS ECS cluster as containers. |
| AWS Lambda Executor | Suitable for short-lived tasks that can be parallelized (low memory requirements). Tasks are executed in serverless AWS Lambda functions. |

3. Usage Example#

Firstly, import covalent

import covalent as ct

Secondly, define your executor

    # Define ONE of the following executors; each assignment is an alternative.

    # AWS Batch
    executor = ct.executor.AWSBatchExecutor(
        s3_bucket_name="covalent-batch-qa-job-resources",
        batch_job_definition_name="covalent-batch-qa-job-definition",
        batch_queue="covalent-batch-qa-queue",
        batch_execution_role_name="ecsTaskExecutionRole",
        batch_job_role_name="covalent-batch-qa-job-role",
        batch_job_log_group_name="covalent-batch-qa-log-group",
        vcpu=2,  # Number of vCPUs to allocate
        memory=3.75,  # Memory in GB to allocate
        time_limit=300,  # Time limit of job in seconds
    )

    # AWS EC2
    executor = ct.executor.EC2Executor(
        instance_type="t2.micro",
        volume_size=8,  # GiB
        ssh_key_file="~/.ssh/ec2_key"
    )

    # AWS Braket
    executor = ct.executor.BraketExecutor(
        s3_bucket_name="braket_s3_bucket",
        ecr_repo_name="braket_ecr_repo",
        braket_job_execution_role_name="covalent-braket-iam-role",
        quantum_device="arn:aws:braket:::device/quantum-simulator/amazon/sv1",
        classical_device="ml.m5.large",
        storage=30,
    )

    # AWS ECS
    executor = ct.executor.ECSExecutor(
        s3_bucket_name="covalent-fargate-task-resources",
        ecr_repo_name="covalent-fargate-task-images",
        ecs_cluster_name="covalent-fargate-cluster",
        ecs_task_family_name="covalent-fargate-tasks",
        ecs_task_execution_role_name="ecsTaskExecutionRole",
        ecs_task_role_name="CovalentFargateTaskRole",
        ecs_task_subnet_id="subnet-000000e0",
        ecs_task_security_group_id="sg-0000000a",
        ecs_task_log_group_name="covalent-fargate-task-logs",
        vcpu=1,
        memory=2
    )

    # AWS Lambda
    executor = ct.executor.AWSLambdaExecutor(
        lambda_role_name="CovalentLambdaExecutionRole",
        s3_bucket_name="covalent-lambda-job-resources",
        timeout=60,
        memory_size=512
    )

Lastly, define a workflow to execute a particular task using one of the above executors

    @ct.electron(
        executor=executor
    )
    def compute_pi(n):
        # Leibniz formula for π
        return 4 * sum(1.0/(2*i + 1)*(-1)**i for i in range(n))

    @ct.lattice
    def workflow(n):
        return compute_pi(n)

    dispatch_id = ct.dispatch(workflow)(100000000)
    result = ct.get_result(dispatch_id=dispatch_id, wait=True)
    print(result.result)

Which should output

3.141592643589326
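The electron above evaluates a partial sum of the Leibniz series, 4 · Σ (-1)^i / (2i + 1). Because the series is alternating with terms that shrink in magnitude, the error after n terms is bounded by the first omitted term, 4 / (2n + 1), which is why so many terms are needed for a handful of correct digits. The sketch below checks this locally without Covalent; the helper names leibniz_pi and truncation_bound are chosen for this example.

```python
import math


def leibniz_pi(n: int) -> float:
    """Partial sum of the Leibniz series for pi using the first n terms."""
    return 4 * sum((-1) ** i / (2 * i + 1) for i in range(n))


def truncation_bound(n: int) -> float:
    """Alternating-series bound on the error after n terms: the first omitted term."""
    return 4 / (2 * n + 1)


# Print the approximation, its actual error, and the bound for a few sizes.
for n in (10, 1_000, 100_000):
    approx = leibniz_pi(n)
    print(n, approx, abs(approx - math.pi), truncation_bound(n))
```

The bound shrinks only linearly in n, so each additional correct digit costs roughly ten times as many terms.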

AWS Batch Executor#

Covalent is a Pythonic workflow tool used to execute tasks on advanced computing hardware.

This executor plugin interfaces Covalent with AWS Batch which allows tasks in a covalent workflow to be executed as AWS batch jobs.

Furthermore, this plugin is well suited for compute/memory intensive tasks such as training machine learning models, hyperparameter optimization, deep learning etc. With this executor, the compute backend is the Amazon EC2 service, with instances optimized for compute and memory intensive operations.

1. Installation#

To use this plugin with Covalent, simply install it using pip:

pip install covalent-awsbatch-plugin

2. Usage Example#

This is an example of how a workflow can be adapted to utilize the AWS Batch Executor. Here we train a simple Support Vector Machine (SVM) model and use an existing AWS Batch compute environment to run the train_svm electron as a batch job. Note that DepsPip is required to install the dependencies when creating the batch job.

    from numpy.random import permutation
    from sklearn import svm, datasets
    import covalent as ct

    deps_pip = ct.DepsPip(
        packages=["numpy==1.23.2", "scikit-learn==1.1.2"]
    )

    executor = ct.executor.AWSBatchExecutor(
        s3_bucket_name="covalent-batch-qa-job-resources",
        batch_job_definition_name="covalent-batch-qa-job-definition",
        batch_queue="covalent-batch-qa-queue",
        batch_execution_role_name="ecsTaskExecutionRole",
        batch_job_role_name="covalent-batch-qa-job-role",
        batch_job_log_group_name="covalent-batch-qa-log-group",
        vcpu=2,  # Number of vCPUs to allocate
        memory=3.75,  # Memory in GB to allocate
        time_limit=300,  # Time limit of job in seconds
    )

    # Use executor plugin to train our SVM model.
    @ct.electron(
        executor=executor,
        deps_pip=deps_pip
    )
    def train_svm(data, C, gamma):
        X, y = data
        clf = svm.SVC(C=C, gamma=gamma)
        clf.fit(X[90:], y[90:])
        return clf

    @ct.electron
    def load_data():
        iris = datasets.load_iris()
        perm = permutation(iris.target.size)
        iris.data = iris.data[perm]
        iris.target = iris.target[perm]
        return iris.data, iris.target

    @ct.electron
    def score_svm(data, clf):
        X_test, y_test = data
        return clf.score(
            X_test[:90],
            y_test[:90]
        )

    @ct.lattice
    def run_experiment(C=1.0, gamma=0.7):
        data = load_data()
        clf = train_svm(
            data=data,
            C=C,
            gamma=gamma
        )
        score = score_svm(
            data=data,
            clf=clf
        )
        return score

    # Dispatch the workflow
    dispatch_id = ct.dispatch(run_experiment)(
        C=1.0,
        gamma=0.7
    )

    # Wait for our result and get result value
    result = ct.get_result(dispatch_id=dispatch_id, wait=True).result
    print(result)

While the workflow executes, you can navigate to the UI to see its status. Once it completes, the above script should also output the score of our model.

0.8666666666666667

3. Overview of Configuration#

| Config Key | Is Required | Default | Description |
|---|---|---|---|
| profile | No | default | Named AWS profile used for authentication |
| region | Yes | us-east-1 | AWS Region to use for client calls |
| credentials | No | ~/.aws/credentials | The path to the AWS credentials file |
| batch_queue | Yes | covalent-batch-queue | Name of the Batch queue used for job management. |
| s3_bucket_name | Yes | covalent-batch-job-resources | Name of an S3 bucket where covalent artifacts are stored. |
| batch_job_definition_name | Yes | covalent-batch-jobs | Name of the Batch job definition for a user, project, or experiment. |
| batch_execution_role_name | No | ecsTaskExecutionRole | Name of the IAM role used by the Batch ECS agent (this role should already exist in AWS). |
| batch_job_role_name | Yes | CovalentBatchJobRole | Name of the IAM role used within the container. |
| batch_job_log_group_name | Yes | covalent-batch-job-logs | Name of the CloudWatch log group where container logs are stored. |
| vcpu | No | 2 | Number of vCPUs available to a task. |
| memory | No | 3.75 | Memory (in GB) available to a task. |
| num_gpus | No | 0 | Number of GPUs available to a task. |
| retry_attempts | No | 3 | Number of times a job is retried if it fails. |
| time_limit | No | 300 | Time limit (in seconds) after which jobs are killed. |
| poll_freq | No | 10 | Frequency (in seconds) with which to poll a submitted task. |
| cache_dir | No | /tmp/covalent | Cache directory used by this executor for temporary files. |

This plugin can be configured in one of two ways:

1. Configuration options can be passed in as constructor keys to the executor class ct.executor.AWSBatchExecutor

2. By modifying the covalent configuration file under the section [executors.awsbatch]

The following shows an example of how a user might modify their covalent configuration file to support this plugin:

[executors.awsbatch]

s3_bucket_name = "covalent-batch-job-resources"

batch_queue = "covalent-batch-queue"

batch_job_definition_name = "covalent-batch-jobs"

batch_execution_role_name = "ecsTaskExecutionRole"

batch_job_role_name = "CovalentBatchJobRole"

batch_job_log_group_name = "covalent-batch-job-logs"

...
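How the two configuration routes might combine can be sketched as follows. The precedence shown here (an explicit constructor argument wins, and None falls back to the configuration file value) is an assumption made for illustration, and resolve_options is a hypothetical helper, not part of the plugin. The dictionary values are taken from the configuration table and example above.

```python
from typing import Any, Dict

# Values as they might appear under [executors.awsbatch] in the config file.
CONFIG_SECTION: Dict[str, Any] = {
    "s3_bucket_name": "covalent-batch-job-resources",
    "batch_queue": "covalent-batch-queue",
    "vcpu": 2,
    "memory": 3.75,
}


def resolve_options(config: Dict[str, Any], **overrides: Any) -> Dict[str, Any]:
    """Merge constructor-style overrides onto config values (assumed precedence)."""
    resolved = dict(config)
    for key, value in overrides.items():
        if value is not None:  # None means "not provided": keep the config value
            resolved[key] = value
    return resolved


# Override only the queue; vcpu=None falls back to the configured default.
options = resolve_options(CONFIG_SECTION, batch_queue="my-team-queue", vcpu=None)
```

Under this assumption, a shared configuration file can hold team-wide defaults while individual workflows override only the keys they need.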

4. Required Cloud Resources#

In order to run your workflows with covalent there are a few notable AWS resources that need to be provisioned first.

| Resource | Is Required | Config Key | Description |
|---|---|---|---|
| AWS S3 Bucket | Yes | | S3 bucket must be created for covalent to store essential files that are needed during execution. |
| VPC & Subnet | Yes | N/A | A VPC must be associated with the AWS Batch Compute Environment along with a public or private subnet (additional resources need to be created for private subnets). |
| AWS Batch Compute Environment | Yes | N/A | An AWS Batch compute environment (EC2) that will provision EC2 instances as needed when jobs are submitted to the associated job queue. |
| AWS Batch Queue | Yes | | An AWS Batch Job Queue that will queue tasks for execution in its associated compute environment. |
| AWS Batch Job Definition | Yes | | An AWS Batch job definition that will be replaced by a new batch job definition when the workflow is executed. |
| AWS IAM Role (Job Role) | Yes | | The IAM role used within the container. |
| AWS IAM Role (Execution Role) | No | | The IAM role used by the Batch ECS agent (default role ecsTaskExecutionRole should already exist). |
| Log Group | Yes | | An AWS CloudWatch log group where task logs are stored. |

To create an AWS S3 Bucket, refer to the following AWS documentation.

To create a VPC & Subnet, refer to the following AWS documentation.

To create an AWS Batch Queue, refer to the following AWS documentation. The associated compute environment must be configured in EC2 mode.

To create an AWS Batch Job Definition, refer to the following AWS documentation. The configuration for this can be trivial, as covalent will update the Job Definition prior to execution.

To create an AWS IAM Role for batch jobs (Job Role), provision a policy with the permissions below, then create a new role and attach the created policy to it. Refer to the following AWS documentation for an example of creating a policy & role in IAM.

AWS Batch IAM Job Policy

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "BatchJobMgmt",
                "Effect": "Allow",
                "Action": [
                    "batch:TerminateJob",
                    "batch:DescribeJobs",
                    "batch:SubmitJob",
                    "batch:RegisterJobDefinition"
                ],
                "Resource": "*"
            },
            {
                "Sid": "ECRAuth",
                "Effect": "Allow",
                "Action": [
                    "ecr:GetAuthorizationToken"
                ],
                "Resource": "*"
            },
            {
                "Sid": "ECRUpload",
                "Effect": "Allow",
                "Action": [
                    "ecr:GetDownloadUrlForLayer",
                    "ecr:BatchGetImage",
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:InitiateLayerUpload",
                    "ecr:UploadLayerPart",
                    "ecr:CompleteLayerUpload",
                    "ecr:PutImage"
                ],
                "Resource": [
                    "arn:aws:ecr:<region>:<account>:repository/<ecr_repo_name>"
                ]
            },
            {
                "Sid": "IAMRoles",
                "Effect": "Allow",
                "Action": [
                    "iam:GetRole",
                    "iam:PassRole"
                ],
                "Resource": [
                    "arn:aws:iam::<account>:role/CovalentBatchJobRole",
                    "arn:aws:iam::<account>:role/ecsTaskExecutionRole"
                ]
            },
            {
                "Sid": "ObjectStore",
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:PutObject",
                    "s3:GetObject"
                ],
                "Resource": [
                    "arn:aws:s3:::<s3_resource_bucket>/*",
                    "arn:aws:s3:::<s3_resource_bucket>"
                ]
            },
            {
                "Sid": "LogRead",
                "Effect": "Allow",
                "Action": [
                    "logs:GetLogEvents"
                ],
                "Resource": [
                    "arn:aws:logs:<region>:<account>:log-group:<cloudwatch_log_group_name>:log-stream:*"
                ]
            }
        ]
    }
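The placeholders in the policy (<region>, <account>, <s3_resource_bucket>, <cloudwatch_log_group_name>) have to be replaced with real values before the policy is created. The sketch below shows one way to generate two of the statements programmatically; the helper names are hypothetical, the account ID is a dummy value, and only the ObjectStore and LogRead statements are rebuilt here, so the output is a fragment rather than the full policy.

```python
import json
from typing import Dict


def object_store_statement(bucket: str) -> Dict:
    """S3 access statement with the bucket placeholder filled in."""
    return {
        "Sid": "ObjectStore",
        "Effect": "Allow",
        "Action": ["s3:ListBucket", "s3:PutObject", "s3:GetObject"],
        "Resource": [f"arn:aws:s3:::{bucket}/*", f"arn:aws:s3:::{bucket}"],
    }


def log_read_statement(region: str, account: str, log_group: str) -> Dict:
    """CloudWatch log read statement with its placeholders filled in."""
    return {
        "Sid": "LogRead",
        "Effect": "Allow",
        "Action": ["logs:GetLogEvents"],
        "Resource": [
            f"arn:aws:logs:{region}:{account}:log-group:{log_group}:log-stream:*"
        ],
    }


# Dummy values for illustration only; substitute your own account details.
policy_fragment = {
    "Version": "2012-10-17",
    "Statement": [
        object_store_statement("covalent-batch-job-resources"),
        log_read_statement("us-east-1", "123456789012", "covalent-batch-job-logs"),
    ],
}
policy_json = json.dumps(policy_fragment, indent=4)
```

Generating the statements from the same values that are passed to the executor helps keep the bucket and log group names in the policy consistent with the executor configuration.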

- class covalent_awsbatch_plugin.awsbatch.AWSBatchExecutor(credentials=None, profile=None, region=None, s3_bucket_name=None, batch_queue=None, batch_execution_role_name=None, batch_job_role_name=None, batch_job_log_group_name=None, vcpu=None, memory=None, num_gpus=None, retry_attempts=None, time_limit=None, poll_freq=None, cache_dir=None, container_image_uri=None)[source]#

AWS Batch executor plugin class.

- Parameters

credentials (Optional[str]) – Full path to AWS credentials file.

profile (Optional[str]) – Name of an AWS profile whose credentials are used.

s3_bucket_name (Optional[str]) – Name of an S3 bucket where objects are stored.

batch_queue (Optional[str]) – Name of the Batch queue used for job management.

batch_execution_role_name (Optional[str]) – Name of the IAM role used by the Batch ECS agent.

batch_job_role_name (Optional[str]) – Name of the IAM role used within the container.

batch_job_log_group_name (Optional[str]) – Name of the CloudWatch log group where container logs are stored.

vcpu (Optional[int]) – Number of vCPUs available to a task.

memory (Optional[float]) – Memory (in GB) available to a task.

num_gpus (Optional[int]) – Number of GPUs available to a task.

retry_attempts (Optional[int]) – Number of times a job is retried if it fails.

time_limit (Optional[int]) – Time limit (in seconds) after which jobs are killed.

poll_freq (Optional[int]) – Frequency with which to poll a submitted task.

cache_dir (Optional[str]) – Cache directory used by this executor for temporary files.

container_image_uri (Optional[str]) – URI of the docker container image used by the executor.

Methods:

boto_session_options() – Returns a dictionary of kwargs to populate a new boto3.Session() instance with proper auth, region, and profile options.

cancel(task_metadata, job_handle) – Cancel the batch job.

from_dict(object_dict) – Rehydrate a dictionary representation

get_cancel_requested() – Get if the task was requested to be canceled

get_dispatch_context(dispatch_info) – Start a context manager that will be used to access the dispatch info for the executor.

get_status(job_id) – Query the status of a previously submitted Batch job.

get_version_info() – Query the database for dispatch version metadata.

poll(task_group_metadata, data) – Block until the job has reached a terminal state.

query_result(task_metadata) – Query and retrieve a completed job’s result.

receive(task_group_metadata, data) – Return a list of task updates.

run(function, args, kwargs, task_metadata) – Abstract method to run a function in the executor in async-aware manner.

run_async_subprocess(cmd) – Invokes an async subprocess to run a command.

send(task_specs, resources, task_group_metadata) – Submit a list of task references to the compute backend.

set_job_handle(handle) – Save the job handle to database

set_job_status(status) – Validates and sets the job state

setup(task_metadata) – Executor specific setup method

submit_task(task_metadata, identity) – Invokes the task on the remote backend.

teardown(task_metadata) – Executor specific teardown method

to_dict() – Return a JSON-serializable dictionary representation of self

validate_status(status) – Overridable filter

write_streams_to_file(stream_strings, …) – Write the contents of stdout and stderr to respective files.

- boto_session_options()#

Returns a dictionary of kwargs to populate a new boto3.Session() instance with proper auth, region, and profile options.

- Return type

Dict[str,str]

- async cancel(task_metadata, job_handle)[source]#

Cancel the batch job.

- Parameters

task_metadata (Dict) – Dictionary with the task’s dispatch_id and node id.

job_handle (str) – Unique job handle assigned to the task by AWS Batch.

- Return type

bool

- Returns

If the job was cancelled or not

- from_dict(object_dict)#

Rehydrate a dictionary representation

- Parameters

object_dict (dict) – a dictionary representation returned by to_dict

- Returns

self

Instance attributes will be overwritten.

- async get_cancel_requested()#

Get if the task was requested to be canceled

- Arg(s)

None

- Return(s)

Whether the task has been requested to be cancelled

- Return type

Any

- get_dispatch_context(dispatch_info)#

Start a context manager that will be used to access the dispatch info for the executor.

- Parameters

dispatch_info (DispatchInfo) – The dispatch info to be used inside current context.

- Return type

AbstractContextManager[DispatchInfo]

- Returns

A context manager object that handles the dispatch info.

- async get_status(job_id)[source]#

Query the status of a previously submitted Batch job.

- Parameters

batch – Batch client object.

job_id (str) – Identifier used to identify a Batch job.

- Returns

status: String describing the task status.

exit_code: Exit code, if the task has completed, else -1.
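A status query like this is typically called in a loop until the job finishes. The sketch below shows such a polling loop against a fake status function; wait_for_terminal_state and make_fake_status are hypothetical helpers, the (status, exit_code) pair follows the return description above, and SUCCEEDED and FAILED are the terminal job states defined by AWS Batch.

```python
import asyncio
from typing import Awaitable, Callable, List, Tuple

TERMINAL_STATES = {"SUCCEEDED", "FAILED"}  # terminal AWS Batch job states

StatusFn = Callable[[str], Awaitable[Tuple[str, int]]]


async def wait_for_terminal_state(get_status: StatusFn, job_id: str,
                                  poll_freq: float = 10.0) -> Tuple[str, int]:
    """Query the job status every poll_freq seconds until it is terminal."""
    status, exit_code = await get_status(job_id)
    while status not in TERMINAL_STATES:
        await asyncio.sleep(poll_freq)
        status, exit_code = await get_status(job_id)
    return status, exit_code


def make_fake_status(states: List[str]) -> StatusFn:
    """Build a fake status coroutine that replays a fixed sequence of states."""
    remaining = iter(states)

    async def get_status(job_id: str) -> Tuple[str, int]:
        status = next(remaining)
        if status == "SUCCEEDED":
            return status, 0
        if status == "FAILED":
            return status, 1
        return status, -1  # job not finished yet, so no exit code

    return get_status


fake = make_fake_status(["SUBMITTED", "RUNNABLE", "RUNNING", "SUCCEEDED"])
final = asyncio.run(wait_for_terminal_state(fake, "job-123", poll_freq=0.01))
```

Using asyncio.sleep between queries keeps the event loop free for other tasks while the job is still running.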

- async get_version_info()#

Query the database for dispatch version metadata.

- Arg:

dispatch_id: Dispatch ID of the lattice

- Returns

{"python": python_version, "covalent": covalent_version}

- async poll(task_group_metadata, data)#

Block until the job has reached a terminal state.

- Parameters

task_group_metadata (Dict) – A dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

data (Any) – The return value of send().

The return value of poll() will be passed directly into receive().

Raise NotImplementedError to indicate that the compute backend will notify the Covalent server asynchronously of job completion.

- Return type

Any

- async query_result(task_metadata)[source]#

Query and retrieve a completed job’s result.

- Parameters

task_metadata (Dict) – Dictionary containing the task dispatch_id and node_id

- Return type

Tuple[Any,str,str]

- Returns

result, stdout, stderr

- async receive(task_group_metadata, data)#

Return a list of task updates.

Each task must have reached a terminal state by the time this is invoked.

- Parameters

task_group_metadata (Dict) – A dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

data (Any) – The return value of poll() or the request body of /jobs/update.

- Return type

List[TaskUpdate]

- Returns

Returns a list of task results, each a TaskUpdate dataclass of the form

    {
        "dispatch_id": dispatch_id,
        "node_id": node_id,
        "status": status,
        "assets": {
            "output": {
                "remote_uri": output_uri,
            },
            "stdout": {
                "remote_uri": stdout_uri,
            },
            "stderr": {
                "remote_uri": stderr_uri,
            },
        },
    }

corresponding to the node ids (task_ids) specified in the task_group_metadata. This might be a subset of the node ids in the originally submitted task group as jobs may notify Covalent asynchronously of completed tasks before the entire task group finishes running.
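The hand-off between send(), poll(), and receive() described above can be sketched as follows. This is a minimal illustration under stated assumptions, not Covalent's implementation: SketchExecutor, run_task_group, the job handle layout, and the file:// URIs are all hypothetical, and plain dictionaries stand in for TaskUpdate objects.

```python
import asyncio


class SketchExecutor:
    """Hypothetical executor showing how send, poll, and receive chain together."""

    async def send(self, task_specs, resources, task_group_metadata):
        # Pretend to submit the task group and return a backend job handle.
        return {"job_id": "job-0"}

    async def poll(self, task_group_metadata, data):
        # 'data' is whatever send() returned; a real backend would wait here.
        await asyncio.sleep(0)
        return {"job_id": data["job_id"], "status": "COMPLETED"}

    async def receive(self, task_group_metadata, data):
        # 'data' is whatever poll() returned; emit one update per node id.
        return [
            {
                "dispatch_id": task_group_metadata["dispatch_id"],
                "node_id": node_id,
                "status": data["status"],
                "assets": {
                    "output": {"remote_uri": f"file:///tmp/{node_id}/output"},
                    "stdout": {"remote_uri": f"file:///tmp/{node_id}/stdout"},
                    "stderr": {"remote_uri": f"file:///tmp/{node_id}/stderr"},
                },
            }
            for node_id in task_group_metadata["node_ids"]
        ]


async def run_task_group(executor, metadata):
    # The return value of each stage is passed directly into the next one.
    handle = await executor.send([], {}, metadata)
    poll_result = await executor.poll(metadata, handle)
    return await executor.receive(metadata, poll_result)


metadata = {"dispatch_id": "dispatch-0", "node_ids": [0, 1], "task_group_id": 0}
updates = asyncio.run(run_task_group(SketchExecutor(), metadata))
```

An executor whose backend reports completion asynchronously would instead raise NotImplementedError from poll(), and receive() would be given the request body of /jobs/update.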

- async run(function, args, kwargs, task_metadata)[source]#

Abstract method to run a function in the executor in async-aware manner.

- Parameters

function (Callable) – The function to run in the executor

args (List) – List of positional arguments to be used by the function

kwargs (Dict) – Dictionary of keyword arguments to be used by the function.

task_metadata (Dict) – Dictionary of metadata for the task. Current keys are dispatch_id and node_id

- Returns

The result of the function execution

- Return type

output

- async static run_async_subprocess(cmd)#

Invokes an async subprocess to run a command.

- Return type

Tuple

- async send(task_specs, resources, task_group_metadata)#

Submit a list of task references to the compute backend.

- Parameters

task_specs (List[TaskSpec]) – a list of TaskSpecs

resources (ResourceMap) – a ResourceMap mapping task assets to URIs

task_group_metadata (Dict) – A dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

The return value of send() will be passed directly into poll().

- Return type

Any

- async set_job_handle(handle)#

Save the job handle to database

- Arg(s)

handle: JSONable type identifying the job being executed by the backend

- Return(s)

Response from the listener that handles inserting the job handle to database

- Return type

Any

- async set_job_status(status)#

Validates and sets the job state

For use with send/receive API

- Return(s)

Whether the action succeeded

- Return type

bool

- async setup(task_metadata)#

Executor specific setup method

- async submit_task(task_metadata, identity)[source]#

Invokes the task on the remote backend.

- Parameters

task_metadata (Dict) – Dictionary of metadata for the task. Current keys are dispatch_id and node_id.

identity (Dict) – Dictionary from _validate_credentials call { "Account": "AWS Account ID", … }

- Returns

Task UUID defined on the remote backend.

- Return type

task_uuid

- async teardown(task_metadata)#

Executor specific teardown method

- to_dict()#

Return a JSON-serializable dictionary representation of self

- Return type

dict

- validate_status(status)#

Overridable filter

- Return type

bool

- async write_streams_to_file(stream_strings, filepaths, dispatch_id, results_dir)#

Write the contents of stdout and stderr to respective files.

- Parameters

stream_strings (Iterable[str]) – The stream_strings to be written to files.

filepaths (Iterable[str]) – The filepaths to be used for writing the streams.

dispatch_id (str) – The ID of the dispatch which initiated the request.

results_dir (str) – The location of the results directory.

This uses aiofiles to avoid blocking the event loop.

- Return type

None
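The idea of writing stdout and stderr without blocking the event loop can be sketched with the standard library alone. The documented method uses aiofiles; the version below swaps in asyncio.to_thread as a stand-in so that it runs without extra dependencies. The helper names and the reduced signature are assumptions made for illustration.

```python
import asyncio
import os
import tempfile


def _write_text(path, text):
    # Ordinary blocking write; it is run in a worker thread below.
    with open(path, "w") as handle:
        handle.write(text)


async def write_streams_sketch(stream_strings, filepaths):
    """Write each stream to its file concurrently, off the event loop thread."""
    await asyncio.gather(
        *(
            asyncio.to_thread(_write_text, path, text)
            for text, path in zip(stream_strings, filepaths)
        )
    )


workdir = tempfile.mkdtemp()
paths = [os.path.join(workdir, "stdout.log"), os.path.join(workdir, "stderr.log")]
asyncio.run(write_streams_sketch(["hello\n", ""], paths))
```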

AWS Braket Executor#

Covalent is a Pythonic workflow tool used to execute tasks on advanced computing hardware.

This plugin allows executing quantum circuits and quantum-classical hybrid jobs in Amazon Braket when you use Covalent.

1. Installation#

To use this plugin with Covalent, simply install it using pip:

pip install covalent-braket-plugin

2. Usage Example#

The following toy example executes a simple one-qubit quantum circuit that prepares a uniform superposition of the standard basis states and then measures the state. We use the PennyLane framework.

import os

import covalent as ct
from covalent_braket_plugin.braket import BraketExecutor

# AWS resources to pass to the executor
credentials = "~/.aws/credentials"
profile = "default"
region = "us-east-1"
s3_bucket_name = "braket_s3_bucket"
# URI of the container image in your private ECR repository (placeholder)
ecr_image_uri = "<aws_account_id>.dkr.ecr.<region>.amazonaws.com/<my-repository>:tag"
iam_role_name = "covalent-braket-iam-role"

# Instantiate the executor
ex = BraketExecutor(
    profile=profile,
    credentials=credentials,
    region=region,
    s3_bucket_name=s3_bucket_name,
    ecr_image_uri=ecr_image_uri,
    braket_job_execution_role_name=iam_role_name,
    quantum_device="arn:aws:braket:::device/quantum-simulator/amazon/sv1",
    classical_device="ml.m5.large",
    storage=30,
    time_limit=300,
)

# Execute the following circuit:
# |0> - H - Measure
@ct.electron(executor=ex)
def simple_quantum_task(num_qubits: int):
    import pennylane as qml

    # These are passed to the Hybrid Jobs container at runtime
    device_arn = os.environ["AMZN_BRAKET_DEVICE_ARN"]
    s3_bucket = os.environ["AMZN_BRAKET_OUT_S3_BUCKET"]
    s3_task_dir = os.environ["AMZN_BRAKET_TASK_RESULTS_S3_URI"].split(s3_bucket)[1]

    device = qml.device(
        "braket.aws.qubit",
        device_arn=device_arn,
        s3_destination_folder=(s3_bucket, s3_task_dir),
        wires=num_qubits,
    )

    @qml.qnode(device=device)
    def simple_circuit():
        qml.Hadamard(wires=[0])
        return qml.expval(qml.PauliZ(wires=[0]))

    res = simple_circuit().numpy()
    return res

@ct.lattice
def simple_quantum_workflow(num_qubits: int):
    return simple_quantum_task(num_qubits=num_qubits)

dispatch_id = ct.dispatch(simple_quantum_workflow)(1)
result_object = ct.get_result(dispatch_id, wait=True)

# We expect 0 as the result
print("Result:", result_object.result)

During the execution of the workflow, one can navigate to the UI to see the status of the workflow. Once the workflow has completed, the above script should also print the result of the quantum measurement.

>>> Result: 0
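The expected value of 0 can be checked by hand: applying a Hadamard gate to |0> gives equal amplitudes on |0> and |1>, so the Pauli-Z expectation, which is the difference of the two probabilities, vanishes. The sketch below reproduces that arithmetic in plain Python; the helper names are hypothetical and it does not use PennyLane or Braket.

```python
import math

# A single-qubit state is stored as [amplitude of |0>, amplitude of |1>].
ket_zero = [1.0, 0.0]


def apply_hadamard(state):
    """Apply H = (1/sqrt(2)) * [[1, 1], [1, -1]] to a single-qubit state."""
    a, b = state
    s = 1.0 / math.sqrt(2.0)
    return [s * (a + b), s * (a - b)]


def expval_pauli_z(state):
    """<Z> = |a|^2 - |b|^2 for the state a|0> + b|1>."""
    a, b = state
    return abs(a) ** 2 - abs(b) ** 2


plus_state = apply_hadamard(ket_zero)
expectation = expval_pauli_z(plus_state)
```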

3. Overview of Configuration#

| Config Key | Is Required | Default | Description |
|---|---|---|---|
| credentials | No | "~/.aws/credentials" | The path to the AWS credentials file |
| braket_job_execution_role_name | Yes | "CovalentBraketJobsExecutionRole" | The name of the IAM role that Braket will assume during task execution. |
| profile | No | "default" | Named AWS profile used for authentication |
| region | Yes | AWS_DEFAULT_REGION environment variable | AWS Region to use for client calls to AWS |
| s3_bucket_name | Yes | amazon-braket-covalent-job-resources | The S3 bucket where Covalent will store input and output files for the task. |
| ecr_image_uri | Yes | | An ECR repository for storing container images to be run by Braket. |
| quantum_device | No | "arn:aws:braket:::device/quantum-simulator/amazon/sv1" | The ARN of the quantum device to use |
| classical_device | No | "ml.m5.large" | Instance type for the classical device to use |
| storage | No | 30 | Storage size in GB for the classical device |
| time_limit | No | 300 | Max running time in seconds for the Braket job |
| poll_freq | No | 30 | How often (in seconds) to poll Braket for the job status |
| cache_dir | No | "/tmp/covalent" | Location for storing temporary files generated by the Covalent server |

This plugin can be configured in one of two ways:

- By passing configuration options as constructor arguments to the executor class ct.executor.BraketExecutor

- By modifying the Covalent configuration file under the section [executors.braket]

The following shows an example of how a user might modify their covalent configuration file to support this plugin:

[executors.braket]

quantum_device = "arn:aws:braket:::device/qpu/ionq/ionQdevice"

time_limit = 3600
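One way to picture how the two configuration routes combine is a lookup with precedence: an explicit constructor argument wins, and anything left unset falls back to the [executors.braket] section. The sketch below illustrates that idea only; resolve_option and the dictionary standing in for the parsed configuration file are hypothetical, and the lookup Covalent actually performs may differ.

```python
# Hypothetical stand-in for the parsed [executors.braket] section.
config_section = {
    "quantum_device": "arn:aws:braket:::device/qpu/ionq/ionQdevice",
    "time_limit": 3600,
    "poll_freq": 30,
}


def resolve_option(name, explicit_value, section):
    """Prefer an explicit constructor argument; otherwise use the config value."""
    if explicit_value is not None:
        return explicit_value
    return section[name]


# Not passed to the constructor, so the configuration file value is used.
time_limit = resolve_option("time_limit", None, config_section)
# Passed explicitly, so the configuration file value is ignored.
poll_freq = resolve_option("poll_freq", 10, config_section)
```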

4. Required Cloud Resources#

The Braket executor requires some resources to be provisioned on AWS. Precisely, users will need an S3 bucket, an ECR repo, and an IAM role with the appropriate permissions to be passed to Braket.

| Resource | Is Required | Config Key | Description |
|---|---|---|---|
| IAM role | Yes | braket_job_execution_role_name | An IAM role granting permissions to Braket, S3, ECR, and a few other resources. |
| ECR repository | Yes | ecr_image_uri | An ECR repository for storing container images to be run by Braket. |
| S3 bucket | Yes | s3_bucket_name | An S3 bucket for storing task-specific data, such as Braket outputs or function inputs. |

One can either follow the below instructions to manually create the resources or use the provided terraform script to auto-provision the resources needed.

The AWS documentation on S3 details how to configure an S3 bucket.

The permissions required for the IAM role are documented in the article “managing access to Amazon Braket”. The sample policy shown below is attached to the default role “CovalentBraketJobsExecutionRole”.

In order to use the Braket executor plugin one must create a private ECR registry with a container image that will be used to execute the Braket jobs using covalent. One can either create an ECR repository manually or use the terraform script provided below. We host the image in our public repository at

public.ecr.aws/covalent/covalent-braket-executor:stable

Note

The container image can be uploaded to a private ECR as follows

docker pull public.ecr.aws/covalent/covalent-braket-executor:stable

Once the image has been obtained, users can tag it with their registry information and upload it to ECR as follows

aws ecr get-login-password --region <region> | docker login --username AWS --password-stdin <aws_account_id>.dkr.ecr.<region>.amazonaws.com

docker tag public.ecr.aws/covalent/covalent-braket-executor:stable <aws_account_id>.dkr.ecr.<region>.amazonaws.com/<my-repository>:tag

docker push <aws_account_id>.dkr.ecr.<region>.amazonaws.com/<my-repository>:tag
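The image reference used in the tag and push commands follows a fixed pattern, which is also the value to supply as ecr_image_uri. A small helper makes the pattern explicit; build_ecr_image_uri is a hypothetical name and the account ID, region, and repository below are placeholders.

```python
def build_ecr_image_uri(aws_account_id: str, region: str, repository: str, tag: str) -> str:
    """Build a private ECR image URI in the form used by the docker commands."""
    registry = f"{aws_account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{registry}/{repository}:{tag}"


# Placeholder values for illustration only.
uri = build_ecr_image_uri("123456789012", "us-east-1", "my-repository", "stable")
```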

Sample IAM policy for Braket’s execution role

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "VisualEditor0",
            "Effect": "Allow",
            "Action": "cloudwatch:PutMetricData",
            "Resource": "*",
            "Condition": {
                "StringEquals": { "cloudwatch:namespace": "/aws/braket" }
            }
        },
        {
            "Sid": "VisualEditor1",
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogStream", "logs:DescribeLogStreams", "ecr:GetDownloadUrlForLayer",
                "ecr:BatchGetImage", "logs:StartQuery", "logs:GetLogEvents",
                "logs:CreateLogGroup", "logs:PutLogEvents", "ecr:BatchCheckLayerAvailability"
            ],
            "Resource": [
                "arn:aws:ecr::348041629502:repository/",
                "arn:aws:logs:::log-group:/aws/braket*"
            ]
        },
        {
            "Sid": "VisualEditor2",
            "Effect": "Allow",
            "Action": "iam:PassRole",
            "Resource": "arn:aws:iam::348041629502:role/CovalentBraketJobsExecutionRole",
            "Condition": {
                "StringLike": { "iam:PassedToService": "braket.amazonaws.com" }
            }
        },
        {
            "Sid": "VisualEditor3",
            "Effect": "Allow",
            "Action": [
                "braket:SearchDevices", "s3:CreateBucket", "ecr:BatchDeleteImage",
                "ecr:BatchGetRepositoryScanningConfiguration", "ecr:DeleteRepository", "ecr:TagResource",
                "ecr:BatchCheckLayerAvailability", "ecr:GetLifecyclePolicy", "braket:CreateJob",
                "ecr:DescribeImageScanFindings", "braket:GetJob", "ecr:CreateRepository",
                "ecr:PutImageScanningConfiguration", "ecr:GetDownloadUrlForLayer", "ecr:DescribePullThroughCacheRules",
                "ecr:GetAuthorizationToken", "ecr:DeleteLifecyclePolicy", "braket:ListTagsForResource",
                "ecr:PutImage", "s3:PutObject", "s3:GetObject",
                "braket:GetDevice", "ecr:UntagResource", "ecr:BatchGetImage",
                "ecr:DescribeImages", "braket:CancelQuantumTask", "ecr:StartLifecyclePolicyPreview",
                "braket:CancelJob", "ecr:InitiateLayerUpload", "ecr:PutImageTagMutability",
                "ecr:StartImageScan", "ecr:DescribeImageReplicationStatus", "ecr:ListTagsForResource",
                "s3:ListBucket", "ecr:UploadLayerPart", "ecr:CreatePullThroughCacheRule",
                "ecr:ListImages", "ecr:GetRegistryScanningConfiguration", "braket:TagResource",
                "ecr:CompleteLayerUpload", "ecr:DescribeRepositories", "ecr:ReplicateImage",
                "ecr:GetRegistryPolicy", "ecr:PutLifecyclePolicy", "s3:PutBucketPublicAccessBlock",
                "ecr:GetLifecyclePolicyPreview", "ecr:DescribeRegistry", "braket:SearchJobs",
                "braket:CreateQuantumTask", "iam:ListRoles", "ecr:PutRegistryScanningConfiguration",
                "ecr:DeletePullThroughCacheRule", "braket:UntagResource", "ecr:BatchImportUpstreamImage",
                "braket:GetQuantumTask", "s3:PutBucketPolicy", "braket:SearchQuantumTasks",
                "ecr:GetRepositoryPolicy", "ecr:PutReplicationConfiguration"
            ],
            "Resource": "*"
        },
        {
            "Sid": "VisualEditor4",
            "Effect": "Allow",
            "Action": "logs:GetQueryResults",
            "Resource": "arn:aws:logs:::log-group:*"
        },
        {
            "Sid": "VisualEditor5",
            "Effect": "Allow",
            "Action": "logs:StopQuery",
            "Resource": "arn:aws:logs:::log-group:/aws/braket*"
        }
    ]
}

Users can use the following Terraform snippet as a starting point to spin up the required resources

provider "aws" {}

data "aws_caller_identity" "current" {}

resource "aws_s3_bucket" "braket_bucket" {

bucket = "my-s3-bucket-name"

force_destroy = true

}

resource "aws_ecr_repository" "braket_ecr_repo" {

name = "amazon-braket-base-executor-repo"

image_tag_mutability = "MUTABLE"

force_delete = true

image_scanning_configuration {

scan_on_push = false

}

provisioner "local-exec" {

command = "docker pull public.ecr.aws/covalent/covalent-braket-executor:stable && aws ecr get-login-password --region <region> | docker login --username AWS --password-stdin ${data.aws_caller_identity.current.account_id}.dkr.ecr.${var.aws_region}.amazonaws.com && docker tag public.ecr.aws/covalent/covalent-braket-executor:stable ${aws_ecr_repository.braket_ecr_repo.repository_url}:stable && docker push ${aws_ecr_repository.braket_ecr_repo.repository_url}:stable"

}

}

resource "aws_iam_role" "braket_iam_role" {

name = "amazon-braket-execution-role"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Action = "sts:AssumeRole"

Effect = "Allow"

Sid = ""

Principal = {

Service = "braket.amazonaws.com"

}

},

]

})

managed_policy_arns = ["arn:aws:iam::aws:policy/AmazonBraketFullAccess"]

}

- class covalent_braket_plugin.braket.BraketExecutor(ecr_image_uri=None, s3_bucket_name=None, braket_job_execution_role_name=None, classical_device=None, storage=None, time_limit=None, poll_freq=None, quantum_device=None, profile=None, credentials=None, cache_dir=None, region=None, **kwargs)[source]#

AWS Braket Hybrid Jobs executor plugin class.

Methods:

boto_session_options() – Returns a dictionary of kwargs to populate a new boto3.Session() instance with proper auth, region, and profile options.

cancel() – Abstract method that sends a cancellation request to the remote backend.

from_dict(object_dict) – Rehydrate a dictionary representation

get_cancel_requested() – Get if the task was requested to be canceled

get_dispatch_context(dispatch_info) – Start a context manager that will be used to access the dispatch info for the executor.

get_status(braket, job_arn) – Query the status of a previously submitted Braket hybrid job.

get_version_info() – Query the database for dispatch version metadata.

poll(task_group_metadata, data) – Block until the job has reached a terminal state.

query_result(query_metadata) – Abstract method that retrieves the pickled result from the remote cache.

receive(task_group_metadata, data) – Return a list of task updates.

run(function, args, kwargs, task_metadata) – Abstract method to run a function in the executor in async-aware manner.

run_async_subprocess(cmd) – Invokes an async subprocess to run a command.

send(task_specs, resources, task_group_metadata) – Submit a list of task references to the compute backend.

set_job_handle(handle) – Save the job handle to database

set_job_status(status) – Validates and sets the job state

setup(task_metadata) – Executor specific setup method

submit_task(submit_metadata) – Abstract method that invokes the task on the remote backend.

teardown(task_metadata) – Executor specific teardown method

to_dict() – Return a JSON-serializable dictionary representation of self

validate_status(status) – Overridable filter

write_streams_to_file(stream_strings, …) – Write the contents of stdout and stderr to respective files.

- boto_session_options()#

Returns a dictionary of kwargs to populate a new boto3.Session() instance with proper auth, region, and profile options.

- Return type

Dict[str,str]
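As a rough illustration of what such a dictionary might contain, the sketch below collects the profile and region under the keyword names that boto3.Session() accepts (profile_name and region_name) and skips unset values. It is written as a free function with hypothetical parameters; the plugin's own method takes no arguments and may build the dictionary differently.

```python
def boto_session_options_sketch(profile=None, region=None):
    """Collect keyword arguments for boto3.Session(), skipping unset values."""
    options = {"profile_name": profile, "region_name": region}
    return {key: value for key, value in options.items() if value is not None}


session_kwargs = boto_session_options_sketch(profile="default", region="us-east-1")
# With boto3 installed, these could be passed as boto3.Session(**session_kwargs).
```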

- async cancel()[source]#

Abstract method that sends a cancellation request to the remote backend.

- Return type

bool

- from_dict(object_dict)#

Rehydrate a dictionary representation

- Parameters

object_dict (dict) – a dictionary representation returned by to_dict

- Returns

self

Instance attributes will be overwritten.
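The round trip between to_dict() and from_dict() can be sketched with a small stand-in class. SketchExecutor and the "type"/"attributes" layout are assumptions chosen for illustration; the dictionary Covalent produces may carry different keys.

```python
import json


class SketchExecutor:
    """Hypothetical stand-in for an executor; not the Covalent base class."""

    def __init__(self, poll_freq=30, time_limit=300):
        self.poll_freq = poll_freq
        self.time_limit = time_limit

    def to_dict(self):
        """Return a JSON-serializable dictionary representation of self."""
        return {"type": type(self).__name__, "attributes": dict(self.__dict__)}

    def from_dict(self, object_dict):
        """Overwrite instance attributes from a dictionary produced by to_dict."""
        if object_dict:
            self.__dict__.update(object_dict["attributes"])
        return self


original = SketchExecutor(poll_freq=10, time_limit=3600)
serialized = json.dumps(original.to_dict())
restored = SketchExecutor().from_dict(json.loads(serialized))
```

Because from_dict() overwrites attributes on an existing instance and returns it, the defaults given to the fresh SketchExecutor() are replaced by the serialized values.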

- async get_cancel_requested()#

Get if the task was requested to be canceled

- Arg(s)

None

- Return(s)

Whether the task has been requested to be cancelled

- Return type

Any

- get_dispatch_context(dispatch_info)#

Start a context manager that will be used to access the dispatch info for the executor.

- Parameters

dispatch_info (DispatchInfo) – The dispatch info to be used inside the current context.

- Return type

AbstractContextManager[DispatchInfo]

- Returns

A context manager object that handles the dispatch info.

- async get_status(braket, job_arn)[source]#

Query the status of a previously submitted Braket hybrid job.

- Parameters

braket – Braket client object.

job_arn (

str) – ARN used to identify a Braket hybrid job.

- Returns

String describing the job status.

- Return type

status

- async get_version_info()#

Query the database for dispatch version metadata.

- Arg:

dispatch_id: Dispatch ID of the lattice

- Returns

{"python": python_version, "covalent": covalent_version}

- async poll(task_group_metadata, data)#

Block until the job has reached a terminal state.

- Parameters

task_group_metadata (Dict) – A dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

data (Any) – The return value of send().

The return value of poll() will be passed directly into receive().

Raise NotImplementedError to indicate that the compute backend will notify the Covalent server asynchronously of job completion.

- Return type

Any

- async query_result(query_metadata)[source]#

Abstract method that retrieves the pickled result from the remote cache.

- Return type

Any

- async receive(task_group_metadata, data)#

Return a list of task updates.

Each task must have reached a terminal state by the time this is invoked.

- Parameters

task_group_metadata (Dict) – A dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

data (Any) – The return value of poll() or the request body of /jobs/update.

- Return type

List[TaskUpdate]

- Returns

Returns a list of task results, each a TaskUpdate dataclass of the form

{
    "dispatch_id": dispatch_id,
    "node_id": node_id,
    "status": status,
    "assets": {
        "output": {
            "remote_uri": output_uri,
        },
        "stdout": {
            "remote_uri": stdout_uri,
        },
        "stderr": {
            "remote_uri": stderr_uri,
        },
    },
}

corresponding to the node ids (task_ids) specified in the task_group_metadata. This might be a subset of the node ids in the originally submitted task group as jobs may notify Covalent asynchronously of completed tasks before the entire task group finishes running.

- async run(function, args, kwargs, task_metadata)[source]#

Abstract method to run a function in the executor in async-aware manner.

- Parameters

function (Callable) – The function to run in the executor

args (List) – List of positional arguments to be used by the function

kwargs (Dict) – Dictionary of keyword arguments to be used by the function.

task_metadata (Dict) – Dictionary of metadata for the task. Current keys are dispatch_id and node_id

- Returns

The result of the function execution

- Return type

output

- async static run_async_subprocess(cmd)#

Invokes an async subprocess to run a command.

- Return type

Tuple

- async send(task_specs, resources, task_group_metadata)#

Submit a list of task references to the compute backend.

- Parameters

task_specs (List[TaskSpec]) – a list of TaskSpecs

resources (ResourceMap) – a ResourceMap mapping task assets to URIs

task_group_metadata (Dict) – A dictionary of metadata for the task group. Current keys are dispatch_id, node_ids, and task_group_id.

The return value of send() will be passed directly into poll().

- Return type

Any

- async set_job_handle(handle)#

Save the job handle to database

- Arg(s)

handle: JSONable type identifying the job being executed by the backend

- Return(s)

Response from the listener that handles inserting the job handle to database

- Return type

Any

- async set_job_status(status)#

Validates and sets the job state

For use with send/receive API

- Return(s)

Whether the action succeeded

- Return type

bool

- async setup(task_metadata)#

Executor specific setup method

- async submit_task(submit_metadata)[source]#

Abstract method that invokes the task on the remote backend.

- Parameters

task_metadata – Dictionary of metadata for the task. Current keys are dispatch_id and node_id.

- Returns

Task UUID defined on the remote backend.

- Return type

task_uuid

- async teardown(task_metadata)#

Executor specific teardown method

- to_dict()#

Return a JSON-serializable dictionary representation of self

- Return type

dict

- validate_status(status)#

Overridable filter

- Return type

bool

- async write_streams_to_file(stream_strings, filepaths, dispatch_id, results_dir)#

Write the contents of stdout and stderr to respective files.

- Parameters

stream_strings (Iterable[str]) – The stream_strings to be written to files.

filepaths (Iterable[str]) – The filepaths to be used for writing the streams.

dispatch_id (str) – The ID of the dispatch which initiated the request.

results_dir (str) – The location of the results directory.

This uses aiofiles to avoid blocking the event loop.

- Return type

None

AWS EC2 Executor#

Covalent is a Pythonic workflow tool used to execute tasks on advanced computing hardware.

This plugin allows tasks to be executed in an AWS EC2 instance (which is auto-created) when you execute your workflow with covalent.

1. Installation#

To use this plugin with Covalent, simply install it using pip:

pip install covalent-ec2-plugin

Note

Users will also need to have Terraform installed on their local machine in order to use this plugin.

2. Usage Example#

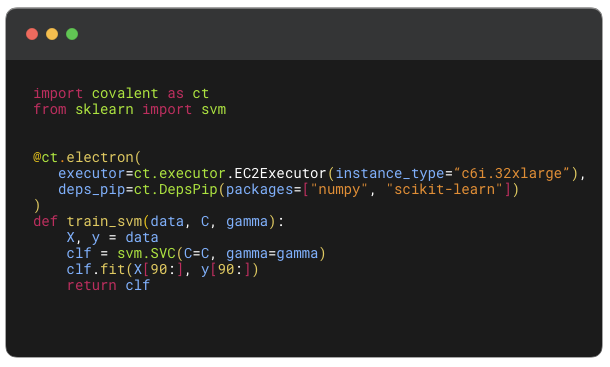

This is a toy example of how a workflow can be adapted to utilize the EC2 Executor. Here we train a Support Vector Machine (SVM) and automatically spin up an EC2 instance to execute the train_svm electron. Note that DepsPip is required to install the dependencies on the EC2 instance.

from numpy.random import permutation
from sklearn import svm, datasets
import covalent as ct

deps_pip = ct.DepsPip(
    packages=["numpy==1.23.2", "scikit-learn==1.1.2"]
)

executor = ct.executor.EC2Executor(
    instance_type="t2.micro",
    volume_size=8,  # GiB
    ssh_key_file="~/.ssh/ec2_key"  # default key_name will be "ec2_key"
)

# Use executor plugin to train our SVM model.
@ct.electron(
    executor=executor,
    deps_pip=deps_pip
)
def train_svm(data, C, gamma):
    X, y = data
    clf = svm.SVC(C=C, gamma=gamma)
    clf.fit(X[90:], y[90:])
    return clf

@ct.electron
def load_data():
    iris = datasets.load_iris()
    perm = permutation(iris.target.size)
    iris.data = iris.data[perm]
    iris.target = iris.target[perm]
    return iris.data, iris.target

@ct.electron
def score_svm(data, clf):
    X_test, y_test = data
    return clf.score(
        X_test[:90],
        y_test[:90]
    )

@ct.lattice
def run_experiment(C=1.0, gamma=0.7):
    data = load_data()
    clf = train_svm(
        data=data,
        C=C,
        gamma=gamma
    )
    score = score_svm(
        data=data,
        clf=clf
    )
    return score

# Dispatch the workflow
dispatch_id = ct.dispatch(run_experiment)(
    C=1.0,
    gamma=0.7
)

# Wait for our result and get result value
result = ct.get_result(dispatch_id=dispatch_id, wait=True).result

print(result)

During the execution of the workflow, one can navigate to the UI to see the status of the workflow. Once the workflow has completed, the above script should also print the score of our model.

0.8666666666666667

3. Overview of Configuration#

| Config Key | Is Required | Default | Description |
|---|---|---|---|
| profile | No | default | Named AWS profile used for authentication |
| region | No | us-east-1 | AWS Region to use for client calls to AWS |
| credentials_file | Yes | ~/.aws/credentials | The path to the AWS credentials file |
| ssh_key_file | Yes | ~/.ssh/id_rsa | The path to the private key that corresponds to the EC2 Key Pair |
| instance_type | Yes | t2.micro | The EC2 instance type that will be spun up automatically. |
| key_name | Yes | Name of key specified in ssh_key_file | The name of the AWS EC2 Key Pair that will be used to SSH into the EC2 instance |
| volume_size | No | 8 | The size in GiB of the GP2 SSD disk to be provisioned with the EC2 instance. |
| vpc | No | (Auto created) | The VPC ID that will be associated with the EC2 instance; if not specified, a VPC will be created. |
| subnet | No | (Auto created) | The Subnet ID that will be associated with the EC2 instance; if not specified, a public Subnet will be created. |
| remote_cache | No | ~/.cache/covalent | The location on the EC2 instance where covalent artifacts will be created. |

This plugin can be configured in one of two ways:

- By passing configuration options as constructor arguments to the executor class ct.executor.EC2Executor

- By modifying the Covalent configuration file under the section [executors.ec2]

The following shows an example of how a user might modify their covalent configuration file to support this plugin:

[executors.ec2]

ssh_key_file = "/home/user/.ssh/ssh_key.pem"

key_name = "ssh_key"
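In both the usage example and the configuration above, key_name matches the file name of ssh_key_file without its extension. A minimal sketch of that convention follows; default_key_name is a hypothetical helper and the plugin's actual derivation may differ.

```python
from pathlib import Path


def default_key_name(ssh_key_file: str) -> str:
    """Derive a key pair name from the key file name, dropping any extension."""
    return Path(ssh_key_file).stem


name_from_usage = default_key_name("~/.ssh/ec2_key")
name_from_config = default_key_name("/home/user/.ssh/ssh_key.pem")
```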

4. Required Cloud Resources#

This plugin requires users to have an AWS account. New users can follow the AWS instructions to create a new account. In order to run workflows with Covalent and the AWS EC2 plugin, there are a few notable resources that need to be provisioned first. Whenever interacting with AWS resources, users are strongly recommended to follow best practices for managing cloud credentials and the principle of least privilege. For this executor, users who wish to deploy the required infrastructure may use the AWS Managed Policy AmazonEC2FullAccess, although some administrators may wish to further restrict instance families, regions, or other options according to their organization’s cloud policies.

The required resources include an EC2 Key Pair and, optionally, a VPC and Subnet that can be used instead of having the EC2 executor create them automatically.

| Resource | Is Required | Config Key | Description |
|---|---|---|---|
| AWS EC2 Key Pair | Yes | key_name | An EC2 Key Pair must be created and named corresponding to the key_name config value. |
| VPC | No | vpc | A VPC ID can be provided corresponding to the vpc config value. |
| Subnet | No | subnet | A Subnet ID can be provided corresponding to the subnet config value. |
| Security Group | No | (Auto Created) | A security group will be auto created and attached to the VPC in order to give the local machine (dispatching workflow) SSH access to the EC2 instance. |
| EC2 Instance | No | (Auto Created) | An EC2 Instance will be automatically provisioned for each electron in the workflow that utilizes this executor. |

To create an AWS EC2 Key pair refer to the following AWS documentation.

To create a VPC & Subnet refer to the following AWS documentation.

When tasks are run using this executor, the following infrastructure is ephemerally deployed.

This includes the minimal infrastructure needed to deploy an EC2 instance in a public subnet connected to an internet gateway. Users can validate that resources are correctly provisioned by monitoring the EC2 dashboard in the AWS Management Console. The overhead added by using this executor is on the order of several minutes, depending on the complexity of any additional user-specified runtime dependencies. Users are advised not to use any sensitive data with this executor without careful consideration of security policies. By default, data in transit is cached on the EBS volume attached to the EC2 instance in an unencrypted format.

These resources are torn down upon task completion and not shared across tasks in a workflow. Deployment of these resources will incur charges for EC2 alone; refer to AWS EC2 pricing for details. Note that this can be deployed in any AWS region in which the user is otherwise able to deploy EC2 instances. Some users may encounter quota limits when using EC2; this can be addressed by opening a support ticket with AWS.

AWS ECS Executor#

With this executor, users can execute tasks (electrons) or entire lattices using the AWS Elastic Container Service (ECS). This executor plugin is well suited for low to medium compute intensive electrons with modest memory requirements. Since AWS ECS offers very quick spin up times, this executor is a good fit for workflows with a large number of independent tasks that can be dispatched simultaneously.

To use this plugin with Covalent, simply install it using pip:

pip install covalent-ecs-plugin

This is an example of how a workflow can be constructed to use the AWS ECS executor. In the example, we join two words to form a phrase and return an excited phrase.

import covalent as ct

executor = ct.executor.ECSExecutor(
    s3_bucket_name="covalent-fargate-task-resources",
    ecr_repo_name="covalent-fargate-task-images",
    ecs_cluster_name="covalent-fargate-cluster",
    ecs_task_family_name="covalent-fargate-tasks",
    ecs_task_execution_role_name="ecsTaskExecutionRole",
    ecs_task_role_name="CovalentFargateTaskRole",
    ecs_task_subnet_id="subnet-871545e1",
    ecs_task_security_group_id="sg-0043541a",
    ecs_task_log_group_name="covalent-fargate-task-logs",
    vcpu=1,
    memory=2,
    poll_freq=10,
)

@ct.electron(executor=executor)
def join_words(a, b):
    return ", ".join([a, b])

@ct.electron(executor=executor)
def excitement(a):
    return f"{a}!"

@ct.lattice
def simple_workflow(a, b):
    phrase = join_words(a, b)
    return excitement(phrase)

dispatch_id = ct.dispatch(simple_workflow)("Hello", "World")
result = ct.get_result(dispatch_id, wait=True)

# Print the workflow's return value rather than the whole result object
print(result.result)

During the execution of the workflow, one can navigate to the UI to see the status of the workflow. Once completed, the above script should also output the result:

Hello, World!

In order for the above workflow to run successfully, one has to provision the required AWS resources as mentioned in 4. Required AWS Resources.

The following table shows a list of all input arguments including the required arguments to be supplied when instantiating the executor:

| Config Value | Is Required | Default | Description |
|---|---|---|---|
| credentials | No | ~/.aws/credentials | The path to the AWS credentials file |
| profile | No | default | The AWS profile used for authentication |
| region | Yes | us-east-1 | AWS region to use for client calls to AWS |
| s3_bucket_name | No | covalent-fargate-task-resources | The name of the S3 bucket where objects are stored |
| ecr_repo_name | No | covalent-fargate-task-images | The name of the ECR repository where task images are stored |
| ecs_cluster_name | No | covalent-fargate-cluster | The name of the ECS cluster on which tasks run |
| ecs_task_family_name | No | covalent-fargate-tasks | The name of the ECS task family for a user, project, or experiment. |
| ecs_task_execution_role_name | No | CovalentFargateTaskRole | The IAM role used by the ECS agent |
| ecs_task_role_name | No | CovalentFargateTaskRole | The IAM role used by the container during runtime |
| ecs_task_subnet_id | Yes | | Valid subnet ID |
| ecs_task_security_group_id | Yes | | Valid security group ID |
| ecs_task_log_group_name | No | covalent-fargate-task-logs | The name of the CloudWatch log group where container logs are stored |
| vcpu | No | 0.25 | The number of vCPUs available to a task |
| memory | No | 0.5 | The memory (in GB) available to a task |
| poll_freq | No | 10 | The frequency (in seconds) with which to poll a submitted task |
| cache_dir | No | /tmp/covalent | The cache directory used by the executor for storing temporary files |

The following snippet shows how users may modify their Covalent configuration to provide the necessary input arguments to the executor:

[executors.ecs]
credentials = "~/.aws/credentials"
profile = "default"
s3_bucket_name = "covalent-fargate-task-resources"
ecr_repo_name = "covalent-fargate-task-images"
ecs_cluster_name = "covalent-fargate-cluster"
ecs_task_family_name = "covalent-fargate-tasks"
ecs_task_execution_role_name = "ecsTaskExecutionRole"
ecs_task_role_name = "CovalentFargateTaskRole"
ecs_task_subnet_id = "<my-subnet-id>"
ecs_task_security_group_id = "<my-security-group-id>"
ecs_task_log_group_name = "covalent-fargate-task-logs"
vcpu = 0.25
memory = 0.5
cache_dir = "/tmp/covalent"
poll_freq = 10
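Before dispatching, it can help to confirm that the settings marked as required in the table above have real values. The following is a minimal sketch, not part of Covalent: `REQUIRED_KEYS` and `missing_required` are hypothetical names, and the configuration is assumed to be available as a plain dictionary rather than read from the Covalent configuration file.

```python
# Hypothetical helper, not part of Covalent: report required ECS executor
# settings that are absent or still set to a "<...>" placeholder.
REQUIRED_KEYS = ("region", "ecs_task_subnet_id", "ecs_task_security_group_id")


def missing_required(config: dict) -> list:
    """Return the required keys that have no usable value in ``config``."""
    missing = []
    for key in REQUIRED_KEYS:
        value = str(config.get(key) or "").strip()
        # Treat empty values and unfilled "<my-...>" placeholders as missing.
        if not value or (value.startswith("<") and value.endswith(">")):
            missing.append(key)
    return missing


# Example settings (assumed values, for illustration only).
example = {
    "region": "us-east-1",
    "ecs_task_subnet_id": "<my-subnet-id>",
    "vcpu": 0.25,
    "memory": 0.5,
}
print(missing_required(example))
```

Here the subnet ID is still a placeholder and the security group ID is absent, so both are reported.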

Within a workflow, users can use this executor with the default values configured in the configuration file as follows:

import covalent as ct

@ct.electron(executor="ecs")
def task(x, y):
    return x + y

Alternatively, users can customize this executor entirely by providing their own values to its constructor as follows:

import covalent as ct
from covalent.executor import ECSExecutor

ecs_executor = ECSExecutor(
    credentials="my_custom_credentials",
    profile="my_custom_profile",
    s3_bucket_name="my_s3_bucket",
    ecr_repo_name="my_ecr_repo",
    ecs_cluster_name="my_ecs_cluster",
    ecs_task_family_name="my_custom_task_family",
    ecs_task_execution_role_name="myCustomTaskExecutionRole",
    ecs_task_role_name="myCustomTaskRole",
    ecs_task_subnet_id="my-subnet-id",
    ecs_task_security_group_id="my-security-group-id",
    ecs_task_log_group_name="my-task-log-group",
    vcpu=1,
    memory=2,
    cache_dir="/home/<user>/covalent/cache",
    poll_freq=10,
)

@ct.electron(executor=ecs_executor)
def task(x, y):
    return x + y

This executor relies on several AWS services (S3, ECR, ECS, and Fargate) to run a task. For the executor to work end-to-end, the following resources must be provisioned, either with Terraform or manually through the AWS Dashboard:

| Resource | Config Name | Description |
|---|---|---|
| IAM Role | ecs_task_execution_role_name | The IAM role used by the ECS agent |
| IAM Role | ecs_task_role_name | The IAM role used by the container during runtime |
| S3 Bucket | s3_bucket_name | The name of the S3 bucket where objects are stored |
| ECR repository | ecr_repo_name | The name of the ECR repository where task images are stored |
| ECS Cluster | ecs_cluster_name | The name of the ECS cluster on which tasks run |
| ECS Task Family | ecs_task_family_name | The name of the task family that specifies container information for a user, project, or experiment |
| VPC Subnet | ecs_task_subnet_id | The ID of the subnet where instances are created |
| Security group | ecs_task_security_group_id | The ID of the security group for task instances |
| CloudWatch log group | ecs_task_log_group_name | The name of the CloudWatch log group where container logs are stored |
| CPU | vcpu | The number of vCPUs available to a task |
| Memory | memory | The memory (in GB) available to a task |
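ECS task definitions express CPU in CPU units (1024 units per vCPU) and memory in MiB, whereas `vcpu` and `memory` are given here in vCPUs and GB. A minimal sketch of that conversion follows, assuming the GB value is interpreted as 1024 MiB per GB. `to_ecs_units` is a hypothetical helper and is not necessarily how the plugin performs the conversion internally.

```python
def to_ecs_units(vcpu: float, memory_gb: float) -> dict:
    """Convert vCPUs and GB to the string values used at ECS task level.

    Assumption: 1 vCPU = 1024 CPU units and 1 GB is treated as 1024 MiB.
    """
    cpu_units = int(vcpu * 1024)
    memory_mib = int(memory_gb * 1024)
    # Task-level cpu and memory fields are passed as strings.
    return {"cpu": str(cpu_units), "memory": str(memory_mib)}


# The executor defaults from the table: 0.25 vCPU and 0.5 GB.
print(to_ecs_units(0.25, 0.5))  # {'cpu': '256', 'memory': '512'}
```

Note that Fargate only accepts certain CPU and memory pairings, so values other than the defaults should be checked against the AWS Fargate task size documentation.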

The following IAM roles and policies must be properly configured so that the executor has all the necessary permissions to interact with the different AWS services:

ecs_task_execution_role_name is the IAM role used by the ECS agent

ecs_task_role_name is the IAM role used by the container during runtime

If omitted, these IAM role names default to ecsTaskExecutionRole and CovalentFargateTaskRole, respectively.

The IAM policy attached to the ecsTaskExecutionRole is the following:

ECS Task Execution Role IAM Policy

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "ecr:GetAuthorizationToken",
                "ecr:BatchCheckLayerAvailability",
                "ecr:GetDownloadUrlForLayer",
                "ecr:BatchGetImage",
                "logs:CreateLogStream",
                "logs:PutLogEvents"
            ],
            "Resource": "*"
        }
    ]
}

This policy allows the ECS agent to download container images from ECR and write container logs to CloudWatch so that tasks can be executed on an ECS cluster. The policy attached to the CovalentFargateTaskRole is as follows:

AWS Fargate Task Role IAM Policy

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "VisualEditor0",
            "Effect": "Allow",
            "Action": "braket:*",
            "Resource": "*"
        },
        {
            "Sid": "VisualEditor1",
            "Effect": "Allow",
            "Action": [
                "s3:PutObject",
                "s3:GetObject",
                "s3:ListBucket"
            ],
            "Resource": [
                "arn:aws:s3:::covalent-fargate-task-resources/*",
                "arn:aws:s3:::covalent-fargate-task-resources"
            ]
        }
    ]
}

Users can provide their custom IAM roles/policies as long as they respect the permissions listed in the above documents. For more information on how to create IAM roles and attach policies in AWS, refer to IAM roles.
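When a custom S3 bucket is used, the bucket ARNs in the task role policy need to change accordingly. The sketch below generates an equivalent policy document for an arbitrary bucket name. `build_task_role_policy` is a hypothetical helper written for illustration and is not part of Covalent or the AWS SDK; it only mirrors the permissions listed in the task role policy above.

```python
import json


def build_task_role_policy(bucket_name: str) -> dict:
    """Return a task role policy mirroring the one above for ``bucket_name``."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "VisualEditor0",
                "Effect": "Allow",
                "Action": "braket:*",
                "Resource": "*",
            },
            {
                "Sid": "VisualEditor1",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:ListBucket"],
                # Object-level actions need the "/*" ARN; ListBucket needs
                # the bucket ARN itself.
                "Resource": [f"{bucket_arn}/*", bucket_arn],
            },
        ],
    }


# "my_s3_bucket" is the example bucket name used earlier in this section.
policy = build_task_role_policy("my_s3_bucket")
print(json.dumps(policy, indent=4))
```

The printed JSON can then be attached to the custom role through whichever provisioning route is already in use (Terraform or the AWS Dashboard).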

The executor also requires a proper networking setup so that the containers can be launched into their respective subnets. The user must provide a subnet ID and a security group ID before using the executor in a workflow.

The executor uses Docker to build container images with the task function code baked into the image. The resulting image is pushed to the Elastic Container Registry (ECR) repository provided by the user. Following this, an ECS task definition using the user-provided arguments is registered and the corresponding task container is launched. The output from the task is uploaded to the S3 bucket provided by the user and parsed to obtain the result object. To build images and run the executor, users must have Docker installed and properly configured on their machines.
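Between launching the task container and fetching its output, the submitted task is polled every `poll_freq` seconds. The following sketch illustrates such a polling loop in isolation. It is an assumption about the general pattern rather than the plugin's actual code: `wait_for_task`, `get_status`, and `max_polls` are hypothetical names, and the status source is injected so the loop can be exercised without AWS access.

```python
import time
from typing import Callable


def wait_for_task(
    get_status: Callable[[], str],
    poll_freq: float = 10,
    max_polls: int = 360,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Poll ``get_status`` until it returns "STOPPED".

    Returns the number of polls made. Raises TimeoutError if the task has
    not stopped after ``max_polls`` polls.
    """
    for attempt in range(1, max_polls + 1):
        if get_status() == "STOPPED":
            return attempt
        # Wait poll_freq seconds before asking again.
        sleep(poll_freq)
    raise TimeoutError(f"task did not stop after {max_polls} polls")


# Simulated sequence of ECS task statuses instead of a real API call.
statuses = iter(["PROVISIONING", "PENDING", "RUNNING", "STOPPED"])
polls = wait_for_task(lambda: next(statuses), poll_freq=0)
print(polls)  # 4
```

In a real setting, `get_status` would wrap a call that looks up the task's last reported status. Bounding the loop with `max_polls` is a design choice made here so that a stuck task cannot block the caller indefinitely.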

AWS Lambda Executor#

With this executor, users can execute tasks (electrons) or entire lattices using the AWS Lambda serverless compute service. This plugin is appropriate for electrons that are expected to be short-lived and low in compute intensity. It can also be used for workflows with a large number of electrons that are embarrassingly parallel (fully independent of each other).

The following AWS resources are required by this executor:

Container based AWS Lambda function

AWS S3 bucket for caching objects

IAM role for Lambda

ECR container registry for storing Docker images

To use this plugin with Covalent, simply install it using pip:

pip install covalent-awslambda-plugin

Note

Due to the isolated nature of AWS Lambda, the packages available in that environment are limited: only the modules that ship with Python out of the box are accessible to your function. Deps are limited in a similar fashion. However, AWS does provide a workaround for pip package installations: https://aws.amazon.com/premiumsupport/knowledge-center/lambda-python-package-compatible/.

This is an example of how a workflow can be constructed to use the AWS Lambda executor. In the example, we join two words to form a phrase and return an excited phrase.